A multi-arm bandit is a slot machine containing N arms, each of which has a fixed (and different) probability of winning. If each arm pays off the same amount when it wins, the learning problem presented by a multi-arm bandit concerns the tradeoff between exploration and exploitation. A learning rule which is too hot may lock on to a suboptimal arm simply because of a lucky winning streak that occurs early on in the exploration phase. A learning rule which is too cold may never settle down to playing only the highest probability arm because it continues to explore.

If we interpret "winning" as "making a successful prediction", a multi-arm bandit may be thought of as a formal model of choosing between competing scientific theories. After all, we don't really know whether any of our scientific theories are really true - all we know is the extent to which they enable us to make correct predictions about the future.

Zollman (2007) uses multi-arm bandits to model competing scientific research programmes. Whereas Zollman considers multi-arm bandits having a fixed number of arms (representing, for example, the set of theories which are currently "on the table"), this model uses variable-arm bandits to model the search for new scientific theories which are potentially more successful than our current theories.

A variable-arm bandit is a slot machine which may have any (countable) number of arms, between 0 and infinity. In each iteration of play, a scientist may take one of the following actions:

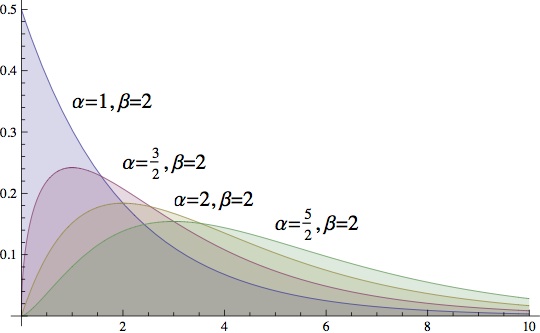

- Attach a new arm to the bandit. (We assume a newly attached arm is randomly assigned a probability of winning. This probability is held constant the entire time the arm is attached to the bandit.)

- Remove an arm from the bandit.

- Pull a selected arm.

In the case of variable-arm bandits, the learning problem now concerns the tradeoff between exploitation, exploration and innovation. The question is, can boundedly rational scientists eventually work their way, by attaching arms and exploring their success, to ultimately settling on pulling an arm that has a probability of winning arbitrarily close to 1? This would correspond to settling on a scientific theory which always (or almost always) makes successful predictions.

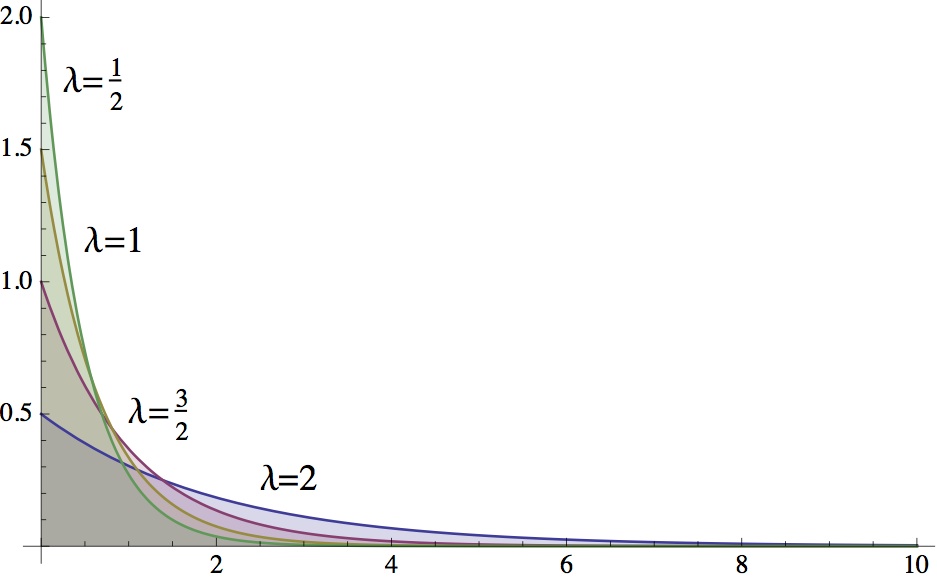

Here, we assume that scientific researchers are boundedly rational and learn via reinforcement learning. The reinforcement learning occurs via an urn model, specifically a Hoppe-Polya urn. This is an urn which begins with a single black ball inside. Now suppose a scientist draws a ball from the urn.

- If the black ball is drawn, the scientist attaches a new arm to the bandit and pulls it. If she wins, he colour-codes the arm (with a unique colour) and adds a ball of that colour to the urn. If she does not win, she removes the arm and throws it away. In all cases, the black ball is returned to the urn as well.

- If a coloured ball is drawn, the scientist pulls the arm of the bandit with the corresponding colour. If she wins, she reinforces by adding another ball of the same colour to the urn. (The ball drawn is always returned to the urn regardless of whether of whether the pull results in a win.)

That's the basic model. Now, one can prove that, in the limit, the standard Hoppe-Polya urn will eventually end up containing infinitely many colours. Hence, the scientist will, in the limit, attempt to attach infinitely many arms to the bandit. Consider now the following questions: will such a scientist end up learning to play an arm with a probability of winning arbitrarily close to one? If so, is it possible for social dynamics to help a population of scientific researchers achieve consensus on an optimal theory (or at least a better theory) more quickly than individuals working in isolation may do so?